Cat Cam

After getting a cat in the summer of 2019 - I decided my girlfriend and I needed a way to check in on the apartment and see what the kitty was up to.

Looking at all the advances in fast object detection neural networks over the last few years I figured I could hack together an open source version of a Google Nest with streaming and object detection emails built in. Hopefully with less creepy privacy invasion and a bit more Python/Linux CLI learned on my part!

Our cat Winnie looking at who knows what

Gratuitous motion detector demo shot

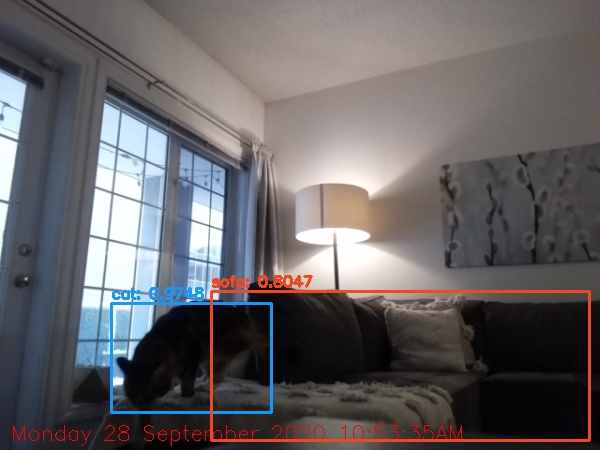

Object detections from a YOLOV3 neural net

I’m pretty pleased with the final result - the web interface can be accessed from outside the local Wifi network to check in on the real time feed and any object detections are emailed from a configured Gmail account for detections of certain types. Motion detection triggers the object detection network run. Email notifications are also controlled with some primitive switches from the web interface - this prevents the Pi running the object detection network needlessly when we are around all the time (a.k.a Covid lockdown).

This will be a full run through to get this up and running with password access from outside the local Wifi.

TL;DR

Clone the repo, set up a couple of Supervisor tasks, and do some port forwarding to stream outside your network.

Contents

- Setup

- Get The Repo and Setup Virtualenv

- Install Darknet, Download YOLOV3 Files

- Configure and Test the Flask Server Locally

- Production with Gunicorn and Nginx

- Security

- Setup Static IP on Raspberry Pi/ Router IP Address Reservation

- IP Reservation + Port Forwarding from Router

- Next Steps

Setup

The object detection network we’ll be using requires a decent bit of RAM and moderate CPU so a 4 GB RAM Raspberry Pi 4 is recommended. This plus a webcam of your choice - the Raspberry Pi Camera module V2 is what I went with to have decent resolution but for Cat-Cam any basic USB webcam will work. Follow the standard instructions to make sure the Raspberry Pi camera module works.

All steps are written assuming you are working over SSH on your Raspberry Pi. I personally use remote development through VS Code and find it pretty intuitive. Beats my previous attempts at editing large code files through my Git Bash window. If SSH is foreign sounding - follow the guide here to get it setup.

This guide is written assuming Raspbian Buster is the OS on the Raspberry Pi.

Get The Repo and Setup Virtualenv

$ git clone https://github.com/dbandrews/cat-cam.git

$ cd cat-cam

Set up a virtualenv to isolate the Cat-Cam environment so it stays stable once running. Use virtualenvwrapper to keep all your envs in the same place.

The project works with Python 3.5 through Python 3.8.

$ pip3 install virtualenv

$ pip3 install virtualenvwrapper

We then need to add a few lines to our shell startup to link to where the envs will live. If editing directly on the Pi use:

$ nano ~/.bashrc

And add the following lines, configured to where you want your envs to sit.

export WORKON_HOME=$HOME/.virtualenvs

export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3

source /usr/local/bin/virtualenvwrapper.sh

Save and close the file (Ctrl + X, Y to save, then “Enter” to use existing file name). Source the file to utilize the changes.

$ source ~/.bashrc

$ mkvirtualenv cv

$ workon cv

$ pip install -r requirements.txt

Now we should have the Python environment all setup!

Install Darknet, Download YOLOV3 Files

Next let’s go get Darknet and YOLO - these aren’t a Satanic cult and a millennial reference, they are a C framework for building neural networks and a specific convolutional neural network architecture. YOLO (You Only Look Once) is known for being a fast object detection architecture because it only executes one forward pass of the network to detect objects. YOLO versions 1-3 were created by the original author of the network, with the more recent V4 put out by another group. Today we’ll set up YOLOV3, as YOLOV4 doesn’t play nice with OpenCV in Python yet. The original Darknet and YOLO site is well worth a read.

Source: https://pjreddie.com/darknet/

Previous approaches to object detection slid a window over the image and ran a standard image classifier on each crop, which means many forward passes per image. Needless to say, we want the lighter weight workload when running on the Raspberry Pi.

In the parent folder of Cat-Cam run:

$ git clone https://github.com/AlexeyAB/darknet.git

$ cd darknet

$ make

Now we need to grab the pre trained weights for YOLOV3 into the darknet/cfg folder:

$ cd cfg

$ wget https://pjreddie.com/media/files/yolov3.weights

Confirm the coco.names file is also in the cfg folder. This allows us to get the labels of the integer ids that YOLO will return when it detects different classes of objects.
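To make the id-to-label lookup concrete, here is a minimal Python sketch. It assumes coco.names holds one class name per line, in class id order. The helper names `load_class_names` and `wanted_detections` are made up for illustration and are not taken from the cat-cam code.

```python
from pathlib import Path


def load_class_names(names_path):
    """Return class names in file order, so the list index equals the YOLO class id."""
    lines = Path(names_path).read_text().splitlines()
    return [line.strip() for line in lines if line.strip()]


def wanted_detections(class_ids, class_names, wanted):
    """Translate detected class ids to labels and keep only the wanted ones."""
    labels = [class_names[i] for i in class_ids]
    return [label for label in labels if label in wanted]
```

Something like `wanted_detections(ids, load_class_names("cfg/coco.names"), {"cat", "person"})` would then drop the chairs and keep the cat.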

Test the build from within the darknet directory using:

$ ./darknet detector test cfg/coco.data cfg/yolov3.cfg cfg/yolov3.weights \
    -thresh 0.25 data/dog.jpg

A large amount of output describing the layer geometry should fly by, and then it will eventually print the objects it detected in the image along with their confidence scores.

I’m not concerned with near real time object detection so I was okay with run times in the 4-12 seconds range. This could be improved by building OpenCV from source (instead of just pip install) and then using the OPENCV flag when building darknet.

Note that building OpenCV from source is typically pretty involved - I made an initial attempt for this project and wasted 8 hours reading error printouts before realizing I didn’t need all the enhancements above and beyond the Python wrappers you can use from pip install.

Configure and Test the Flask Server Locally

Ok - we are almost ready to stream some video! Within the Cat-Cam repo find the config.py file to update some settings.

- web_page_title: Use this to modify the title text shown on the webpage and browser tab.

- fps: Frames per second the video stream will be limited to. I found that serving the stream over cellular data when out of the local network would introduce a fair bit of lag, and setting this to ~5 will make it stay in sync.

- yolo_path: Path to the YOLOV3 .cfg and .weights files on your Raspberry Pi.

- email_object_classes: The class names you want email notifications about when there is a motion capture. Check the file coco.names in the darknet/cfg folder for all the options. Emails are only sent when one of these classes is detected (I was getting a fair few emails about chairs being detected before I put this in ;) )
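For orientation, a config.py along these lines would cover the four settings above. Treat it as a hedged sketch: the setting names come from the list, but the values, the exact types, and the path are my assumptions here, so compare against the file that ships in the repo.

```python
# config.py (illustrative values only; compare with the file shipped in the repo)

# Title shown on the web page and the browser tab.
web_page_title = "Winnie Cam"

# Cap on streamed frames per second; around 5 kept the stream in sync on cellular data.
fps = 5

# Assumed location of the YOLOV3 .cfg and .weights files on the Pi.
yolo_path = "/home/pi/darknet/cfg"

# Only these classes trigger an email; names must match entries in coco.names.
email_object_classes = ["cat", "person"]
```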

For the email notifications - we should also configure the credentials to send the email through. Sign up for a new Gmail account and turn on access for less secure apps.

Then use the example_credentials.py for formatting, filling out the email and password you want to send the notifications using (GMAIL_EMAIL, GMAIL_PWD) and the email you’ll send them to (aptly named RECEIVER_EMAIL).

Save this file as credentials.py in the cat-cam folder. This file is not version controlled - also note that storing username/password combos in plain text is typically not recommended. Consider only doing this if you make a dedicated Gmail account for this purpose.
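As a rough idea of what those credentials get used for, here is a minimal sketch of building and sending a notification with Python's standard library. The function names and the subject line are hypothetical, not lifted from yolo_email.py. The `sender`, `password` and `receiver` arguments stand in for GMAIL_EMAIL, GMAIL_PWD and RECEIVER_EMAIL from credentials.py.

```python
import smtplib
from email.message import EmailMessage


def build_alert(sender, receiver, labels, image_bytes):
    """Build an email with the annotated detection image attached."""
    msg = EmailMessage()
    msg["Subject"] = "Cat-Cam detected: " + ", ".join(labels)
    msg["From"] = sender
    msg["To"] = receiver
    msg.set_content("Motion was detected; the annotated frame is attached.")
    msg.add_attachment(image_bytes, maintype="image", subtype="jpeg",
                       filename="prediction.jpg")
    return msg


def send_alert(msg, sender, password):
    """Send through Gmail's SMTP-over-SSL endpoint (needs network access)."""
    with smtplib.SMTP_SSL("smtp.gmail.com", 465) as server:
        server.login(sender, password)
        server.send_message(msg)
```

Keeping the message building separate from the sending makes it easy to check the attachment logic without touching the network.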

Next, let’s test the install using Flask’s built in development server. From within the cat-cam folder, we want to activate the virtualenv Python interpreter, and use app.py as our launch point:

$ workon cv

(cv) $ python app.py

You should then see something like the following - note the handy warning about not using this server in a production environment.

At this point - you hopefully already know the IP address of your Raspberry Pi (if not, you can run ifconfig from the terminal and note the entry next to inet under the wlan0 section of the output).

With this IP address in hand and the development server running on the Pi - you can enter the IP address + port of the Pi in the address bar of an Internet browser on another computer on the Wifi network. Make sure to use http:// and not https:// here. As an example, my Pi is at IP address 192.168.0.13 and the development server for this app listens on port 8000, so I would use:

http://192.168.0.13:8000

You should see something like the following (your title instead of Winnie Cam hopefully):

Streaming Video With Flask - A New Hope

Most of the Flask app here is based on a post from creator of the bible of Flask development: Miguel Grinberg.

Lots of websites out there have stock Flask code that demonstrate streaming MJPEG from a webcam - but the post here discusses fixes for numerous issues typical of these approaches. The main performance improvement here being synchronizing the process capturing the frames with the process that is serving these frames to clients. The magic here happens in the CameraEvent class within base_camera.py.

From this change - the CPU usage on the Pi goes from ~90% down to ~10% with one client (web page) requesting frames. This made a major difference to cooling the Pi, as running at near full CPU utilization was quickly nearing the limit of its passive cooling capacity.
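The synchronization idea can be sketched in a few lines of Python. This is a simplified illustration of the pattern, not the CameraEvent class from base_camera.py: each client thread gets its own `threading.Event`, the camera thread sets them all when a new frame lands, and a client clears its own event once it has used the frame. The class name `FrameEvent` and the `client_count` helper are assumptions for this example.

```python
import threading


class FrameEvent:
    """Signal every waiting client thread when a new frame is available."""

    def __init__(self):
        # Maps a client thread's ident to that client's own Event.
        self._events = {}
        self._lock = threading.Lock()

    def wait(self, timeout=None):
        """Called by a client thread: block until the next frame is signalled."""
        ident = threading.get_ident()
        with self._lock:
            event = self._events.setdefault(ident, threading.Event())
        return event.wait(timeout)

    def set(self):
        """Called by the camera thread after it has captured a frame."""
        with self._lock:
            events = list(self._events.values())
        for event in events:
            event.set()

    def clear(self):
        """Called by a client thread once it has consumed the frame."""
        with self._lock:
            event = self._events.get(threading.get_ident())
        if event is not None:
            event.clear()

    def client_count(self):
        """Number of client threads that have registered so far."""
        with self._lock:
            return len(self._events)
```

Because clients sleep inside `wait()` instead of polling for frames in a busy loop, the CPU is only touched when there is actually a new frame to serve.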

Motion Detection and Object Detection Workflow

The motion detection code in motion_detection/singlemotiondetection.py maintains a weighted rolling average background frame of the pixel intensities over the previous 10 frames and thresholds each new frame’s difference from it. Any pixels that change by more than this threshold are contoured, post-processed (erosion/dilation), and a bounding box containing all the differences is returned.
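Here is a stripped-down sketch of that idea in plain Python, with frames represented as nested lists of grayscale intensities. It is an illustration under assumptions, not the code in singlemotiondetection.py: real frames would be OpenCV/NumPy arrays, the erosion/dilation step is left out, and the `alpha` and `threshold` defaults are placeholder values I picked.

```python
def update_background(background, frame, alpha=0.1):
    """Blend the new frame into the running average (weight alpha on the new frame)."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]


def motion_bounding_box(background, frame, threshold=25):
    """Return (min_x, min_y, max_x, max_y) around changed pixels, or None if still."""
    changed = [(x, y)
               for y, (b_row, f_row) in enumerate(zip(background, frame))
               for x, (b, f) in enumerate(zip(b_row, f_row))
               if abs(f - b) > threshold]
    if not changed:
        return None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return min(xs), min(ys), max(xs), max(ys)
```

A smaller `alpha` makes the background adapt more slowly, so a cat that sits still for a while takes longer to fade into the background.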

On motion being detected - an image last_movement.jpg is saved out to the root of the cat-cam directory which is then a trigger for our object detection workflow using YOLOV3.

The object detection and email process runs in a separate script to allow the Flask front end to be separate from the code running the YOLO network. The file yolo_email.py watches the last_movement.jpg file for changes every second and on the file being changed runs the motion capture image through the YOLOV3 network writing out prediction.jpg once complete. If the specified classes of objects are detected - this image is then attached and sent using the email configured in credentials.py.
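The "watch a file for changes every second" part can be done with nothing more than modification times. The sketch below is one way to do it, assuming polling on the file's mtime; the names `FileWatcher` and `watch` are hypothetical and yolo_email.py may well do this differently.

```python
import os
import time


class FileWatcher:
    """Report when a file's modification time differs from the last one seen."""

    def __init__(self, path):
        self.path = path
        self._last = self._mtime()

    def _mtime(self):
        try:
            return os.stat(self.path).st_mtime_ns
        except FileNotFoundError:
            return None

    def changed(self):
        """Return True once for each new modification time."""
        current = self._mtime()
        if current is not None and current != self._last:
            self._last = current
            return True
        return False


def watch(path, on_change, poll_seconds=1.0):
    """Poll forever, calling on_change(path) whenever the file is rewritten."""
    watcher = FileWatcher(path)
    while True:
        if watcher.changed():
            on_change(path)
        time.sleep(poll_seconds)
```

In this setup `on_change` would be the function that runs last_movement.jpg through the network and decides whether to send an email.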

If the “Turn Off Emails/Turn On Emails” buttons have been toggled from the web page - the file email_switch.csv is written to with column status = email_off/email_on.
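A tiny CSV like that is easy to read and write with the standard library. The following is a sketch under assumptions: a single `status` column holding `email_on` or `email_off` as described above, with emails treated as enabled when the file does not exist yet (that default is my guess, not something the repo guarantees). The function names are made up for the example.

```python
import csv


def write_email_switch(path, emails_on):
    """Record the switch state in a one-column CSV with the header 'status'."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["status"])
        writer.writeheader()
        writer.writerow({"status": "email_on" if emails_on else "email_off"})


def emails_enabled(path):
    """Return False only if the last row says email_off (assumed default: on)."""
    try:
        with open(path, newline="") as f:
            rows = list(csv.DictReader(f))
    except FileNotFoundError:
        return True
    return not rows or rows[-1]["status"] != "email_off"
```

Using a file as the switch keeps the Flask front end and the YOLO/email script fully decoupled: one writes, the other reads.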

Production with Gunicorn and Nginx

After comfortably ignoring the warning about not using the Flask development server for a few months - I decided to see what using more production ready frameworks would entail. This was mainly done as a learning exercise considering this Flask app will see peak traffic of ~ 2 users.

With this being my first attempt at fully deploying something on a Python web stack I found this link helpful in wrapping my head around the role of Flask/Gunicorn/Nginx in deployment.

Here’s the quick overview of what we’ll be setting up:

Source: https://circus.readthedocs.io/en/latest/_images/classical-stack.png

The client here signifies any browser that would be accessing the web page - supervisord is a Linux tool that monitors our Gunicorn app to ensure it is always running or restart it if the Raspberry Pi reboots.

Gunicorn Deployment

Gunicorn is a popular web server gateway interface (WSGI) server for Python. It essentially formalizes passing requests to the Flask app and handles assigning load to workers. Synchronous and asynchronous workers can be used to allow the app to scale.

With asynchronous workers - we can have multiple windows of the webcam stream open and should have no issues with performance in case we wanted to use this for something with a larger audience. I’ve configured the app to use Gevent asynchronous workers.

In the initial Python virtualenv setup, we have installed Gunicorn already so we can test the Flask app being run through Gunicorn with:

$ cd cat-cam

$ /home/pi/.virtualenvs/cv/bin/gunicorn --worker-class gevent --workers 1 --bind 0.0.0.0:8000 app:app

Replace the path above with the correct location of Gunicorn within your virtual environment bin folder

This should start the app and spawn the worker process. To continue testing we need to setup Nginx in front of Gunicorn to be able to access the app. Press Ctrl + C twice to stop the Gunicorn process.

If you’re using an IDE with remote development capabilities, you can forward the port from the Pi to your local machine and then test the Gunicorn deployment through the browser. Using VS Code for example, the process can be found here
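The Gunicorn flags used above can also live in a config file, since Gunicorn config files are plain Python. This is an optional equivalent that I am sketching here, not something the repo is stated to include; the file name gunicorn.conf.py is just the conventional choice.

```python
# gunicorn.conf.py (illustrative; mirrors the command line flags used above)

# Address and port Gunicorn listens on; Nginx will proxy requests to this port.
bind = "0.0.0.0:8000"

# A single gevent (asynchronous) worker, matching --worker-class gevent --workers 1.
worker_class = "gevent"
workers = 1
```

With this file in the cat-cam folder, the launch command would shrink to the gunicorn path followed by `-c gunicorn.conf.py app:app`.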

Nginx Config

Next, we’ll setup Nginx (pronounced engine-x) as a reverse proxy to handle incoming requests over port 80 for standard HTTP requests and port 443 for HTTPS. We’ll forward requests aimed at port 80 to port 443 to make all traffic encrypted.

For HTTPS we need an SSL certificate - this is a file that guarantees a website owns the domain and is issued by a certificate authority. It is also used in encrypting the communication between your browser and the domain. For this project, we can create a self signed SSL certificate - this is only good for prototyping, as sending the link to other people will raise the security warning:

Not clicking through to websites like this has mostly been ingrained these days - but in this case it is how we’ll access the website.

We can get an SSL cert with the following:

$ cd cat-cam

$ mkdir certs

$ openssl req -new -newkey rsa:4096 -days 365 -nodes -x509 \
    -keyout certs/key.pem -out certs/cert.pem

Next let’s get Nginx:

$ sudo apt update

$ sudo apt install nginx

This should install Nginx and will require confirmation before installing.

Next we just need to setup the Nginx config file. Here’s the example config I’ve put in the repo as a starting point - the main points that need updating are any of the paths mentioning “cat-cam”. These need to reflect your install directory for cat-cam and where you’ve put the SSL certificates.

server {
    # listen on port 80 (http)
    listen 80;
    server_name _;

    location / {
        # redirect any requests to the same URL but on https
        return 301 https://$host$request_uri;
    }
}

server {
    # listen on port 443 (https)
    listen 443 ssl;
    server_name _;

    # location of the self-signed SSL certificate
    # UPDATE
    ssl_certificate /home/pi/cat-cam/certs/cert.pem;
    ssl_certificate_key /home/pi/cat-cam/certs/key.pem;

    # write access and error logs to /var/log
    # UPDATE
    access_log /var/log/cat_cam_access.log;
    error_log /var/log/cat_cam_error.log;

    location / {
        # forward application requests to the gunicorn server.
        proxy_pass http://0.0.0.0:8000;
        proxy_redirect off;
        proxy_buffering off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # Block for passwords restriction
        auth_basic "Restricted Content";
        auth_basic_user_file /etc/nginx/.htpasswd;
    }

    location /static {
        # handle static files directly, without forwarding to the application
        # UPDATE
        alias /home/pi/cat-cam/app/static;
        expires 30d;
    }
}

After updating the config for your install paths - save it into /etc/nginx/sites-enabled/ as cat-cam.conf. Reload Nginx for the new config using:

$ sudo /etc/init.d/nginx reload

We’ll also configure a password to access the website - combining this with SSL means that we won’t be communicating passwords unencrypted with the server but I would still have hesitations calling this perfectly secure. I suggest using a very strong password and keeping the webcam aimed at a corner of the room when not in use.

Setup a password using the following - we are going to store it in the /etc/nginx/.htpasswd to match the config above.

$ sudo sh -c "echo -n 'your_desired_username_here:' >> /etc/nginx/.htpasswd"

$ sudo sh -c "openssl passwd -apr1 >> /etc/nginx/.htpasswd"

The second command will prompt you for your password. After completing this step we should have full authentication on the website.
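To see why the password protection really needs HTTPS underneath it, it helps to look at what the browser sends for HTTP Basic auth. The credentials are only base64 encoded, not encrypted, as this small Python sketch shows. The function names are made up for illustration.

```python
import base64


def basic_auth_header(username, password):
    """Build the Authorization header a browser sends for HTTP Basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def decode_basic_auth(header_value):
    """Recover (username, password) from the header, showing it is only encoded."""
    token = header_value.split(" ", 1)[1]
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password
```

Anyone who can read the raw request can run the second function, which is exactly what the redirect from port 80 to port 443 is there to prevent.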

Setup Supervisor Tasks

Next, we want to setup a process to watch the web server, and YOLO email script so that they are both restarted on reboot or if either process crashes. To do this we use Supervisor, a process control system meant for managing long running scripts or tasks.

Install Supervisor with:

$ sudo apt-get install supervisor

Once this is done running - start it up using:

$ sudo service supervisor restart

We just need to build config files for the processes we want it to watch - I’ve provided examples in the repo: example_supervisor_conf_gunicorn and example_supervisor_conf_yolo_email. This is what the configuration will look like for managing the web app process:

[program:winnie_cam]

command=/home/pi/.virtualenvs/cv/bin/gunicorn --worker-class gevent --workers 1 --bind 0.0.0.0:8000 app:app

directory=/home/pi/cat-cam

user=pi

autostart=true

autorestart=true

stopasgroup=true

killasgroup=true

Here you’ll just want to update the /home/pi/.virtualenvs/cv/bin/gunicorn line to point to the location of your gunicorn install. If you installed gunicorn into a virtualenv have a look in the bin folder of the virtualenv.

Update the directory as well to point to where you cloned the cat-cam repo to. Finally, double check that user=pi is correct for your situation (i.e. you haven’t changed your user name from the default on Raspberry Pi).

For the YOLO/email process, we can use the following:

[program:yolo_email]

command=/home/pi/.virtualenvs/cv/bin/python3 /home/pi/cat-cam/yolo_email.py

directory=/home/pi/cat-cam

user=pi

autostart=true

autorestart=true

stopasgroup=true

killasgroup=true

Similarly here, update the command line to use the Python 3 executable that has all the requirements installed. Again, if using a virtualenv, this will be inside the bin folder of the virtualenv. Update the argument of the command to point to the yolo_email.py script where you installed the cat-cam repo.

Update the directory as well to point to where you cloned the cat-cam repo to. Again, double check that user=pi is correct for your situation.

Copy both of these files into /etc/supervisor/conf.d/, naming them cat-cam.conf and yolo_email.conf.

Tell Supervisor to reread its configuration and start managing the new processes using:

$ sudo supervisorctl reread

$ sudo supervisorctl update

There are lots of resources online about using Supervisor to get status updates from processes and write log files, amongst other functionality.

Make sure the Nginx and Supervisor setup has worked by visiting the internal IP address of your Raspberry Pi again. This time you shouldn’t have to specify port 8000 as Nginx will proxy your request to your web app. The password login box should also pop up to confirm your identity. After logging in you should see something similar to:

Security

At this point we are almost there!

There are a few tweaks we can make, before opening up ports to the outside world. We’ll make sure that we only access the Raspberry Pi using SSH and disable password logins. We’ll also ensure a firewall is setup to block all ports other than the ones we are using for the cat-cam app.

Note: if you don’t want to enable the functionality of accessing the live web cam stream from outside your local Wi-fi, stop here! The motion detection -> YOLO classification and email will still work to alert you of anything happening at home. You can also access the website from within your local Wi-fi to turn on/turn off the email alerts before leaving the house. Just ensure Supervisor has started watching the two processes and you should be good to go.

Disable Password Logins to Pi

At this point - if we want the Raspberry Pi to be more secure, we want to ensure we are only using SSH keys to log in and we aren’t using a root account. The pi user on a Raspberry Pi is a non root user by default. Not using a root user all the time means less chance of malicious actors being able to gain access to all your data and execute arbitrary code as easily (there are still hazards having sudo privileges, but it is still slightly more secure). Disabling passwords prevents brute force password guessing.

If you are already using SSH keys to work on your Pi as the pi user, we just need to disable root logins and password logins by editing /etc/ssh/sshd_config. Confirm that key-based login works first, otherwise turning off passwords will lock you out. Using sudo privileges, this is quick with the built in text editor nano:

$ sudo nano /etc/ssh/sshd_config

Find and change the following two lines to “no”:

PermitRootLogin no

PasswordAuthentication no

Restart the SSH service using:

$ sudo service ssh restart

UFW Firewall Check

Next, we get the uncomplicated firewall or UFW setup. Install UFW and configure it with:

$ sudo apt-get install -y ufw

$ sudo ufw allow ssh

$ sudo ufw allow http

$ sudo ufw allow 443/tcp

This is only going to allow port 22 (SSH), port 80 (HTTP), and port 443 (HTTPS) to accept connections. In the Nginx configuration above, port 80 is going to be forwarded to port 443 and then be proxied by Nginx through to port 8000 where the Flask app is running by default.

Enable the firewall, then check that it is running and the rules make sense with:

$ sudo ufw --force enable

$ sudo ufw status

Which should produce the following:

Last login: Mon Nov 16 14:57:18 2020 from 192.168.0.11

pi@raspberrypi:~$ sudo ufw status

Status: active

To                         Action      From
--                         ------      ----
22/tcp                     ALLOW       Anywhere
80/tcp                     ALLOW       Anywhere
443/tcp                    ALLOW       Anywhere
22/tcp (v6)                ALLOW       Anywhere (v6)
80/tcp (v6)                ALLOW       Anywhere (v6)
443/tcp (v6)               ALLOW       Anywhere (v6)
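If you want a second opinion on the firewall from the outside, a quick TCP connect test from another machine on the network does the job. This is a minimal sketch; `is_port_open` is a name I made up and the IP address in the usage note is the example address from this post.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Looping over ports 22, 80, 443 and 8000 with `is_port_open("192.168.0.13", port)` should show the first three reachable, while 8000 (Gunicorn) should no longer answer from other machines once the firewall is active.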

Setup Static IP on Raspberry Pi/ Router IP Address Reservation

Next we just need to ensure the Raspberry Pi is always where we want it to be on our local network. This will mean we set the IP address on the Pi, and then reserve that IP address in your router configuration so it doesn’t try to assign your Pi’s address to another device.

First grab your router’s internal IP address (how devices already on your Wifi identify the router) using:

$ ip r | grep default

This will produce something like the following:

default via 192.168.0.1 dev wlan0 src 192.168.0.13 metric 303

Make a note of the first IP address - this is your router’s internal IP address (192.168.0.1 above). Also record the second IP address, this is your Raspberry Pi’s current address (192.168.0.13 above).
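If you would rather not eyeball the output, pulling the two addresses out of that line takes only a few lines of Python. This is a sketch that assumes the line has the `via <router>` and `src <pi>` fields shown above; `parse_default_route` is a hypothetical helper name.

```python
def parse_default_route(line):
    """Extract the router ('via') and local ('src') addresses from an `ip r` line."""
    tokens = line.split()

    def value_after(key):
        # The address is the token immediately following its keyword.
        if key in tokens and tokens.index(key) + 1 < len(tokens):
            return tokens[tokens.index(key) + 1]
        return None

    return value_after("via"), value_after("src")
```

Feeding it the example line above gives back the router address first and the Pi's address second.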

Now we change the config file that tells your Pi what IP addresses it should use. Open the file with nano:

$ sudo nano /etc/dhcpcd.conf

Add the following lines (they may already be commented out near the bottom):

# If configuring for wireless, use 'wlan0', for ethernet cable use 'eth0'

interface wlan0

static ip_address=<YOUR_RASPBERRY_PI_IP>/24

static routers=<EXISTING_ROUTER_INTERNAL_IP>

static domain_name_servers=<EXISTING_ROUTER_INTERNAL_IP>

Press Ctrl + X, then Y, then Enter to save changes.

Reboot the Pi and you should have a static IP address!
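Since a typo in those lines can knock the Pi off the network, here is a small Python sketch that renders the block and sanity checks it first. It assumes a /24 network as in the snippet above and simply verifies that the router address sits in the same subnet as the Pi. The function name is made up for this example.

```python
import ipaddress


def dhcpcd_static_block(pi_ip, router_ip, interface="wlan0", prefix=24):
    """Render the dhcpcd.conf lines, checking that both addresses share a subnet."""
    network = ipaddress.ip_network(f"{pi_ip}/{prefix}", strict=False)
    if ipaddress.ip_address(router_ip) not in network:
        raise ValueError(f"{router_ip} is not in {network}")
    return "\n".join([
        f"interface {interface}",
        f"static ip_address={pi_ip}/{prefix}",
        f"static routers={router_ip}",
        f"static domain_name_servers={router_ip}",
    ])
```

Printing `dhcpcd_static_block("192.168.0.13", "192.168.0.1")` gives the four lines ready to paste into /etc/dhcpcd.conf.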

IP Reservation + Port Forwarding from Router

Ok - a little bit of GUI clicking and we are home free. We’ll set up the Raspberry Pi to always get the same IP address, and open a port to external traffic (i.e. you, on your phone away from the house).

Enter the IP address of your router that you noted above into your browser’s address bar - you should see a login screen of some sort. The best step here is checking your router’s physical box for a sticker with the admin password. Or maybe you changed it yourself already! In a pinch, use some Google-fu and find the defaults for your model of router.

The tricky part here is that all router configuration software is different. You are looking for something mentioning “LAN” (Local Area Network). Within this section there should be settings for DHCP that will allow for manually specifying IP addresses. Routers will refer to all the devices by their MAC address, look up your Raspberry Pi MAC address and assign the IP address you made static on the Raspberry Pi in the last step.

My router’s configuration page for IP address assignment

Next we are going to forward the ports we opened up (80 for HTTP and 443 for HTTPS) to the Raspberry Pi. Look for a section under the WAN settings that mentions port forwarding. You’ll use the internal IP address for the Raspberry Pi from the previous steps (mine in the examples here is 192.168.0.13) to make records for forwarding the respective ports.

Port forward settings on my router

Finally, we need the external IP address of the router. This is the address you’ll visit in your internet browser to access the webcam feed from outside of the Wifi network. It is typically under the WAN section and listed as WAN IP, but this can really depend on your router model. If in doubt, a little Googling of your router model will get you there.

Next Steps

I’ll Dockerize this whole process at some point and improve the process for running the image through the YOLOV3 step. Let me know if you have any issues on the repo!

Dustin Andrews is an engineer and data scientist in Calgary, Alberta. Code, the outdoors and sometimes ceramics.